Hello everyone!

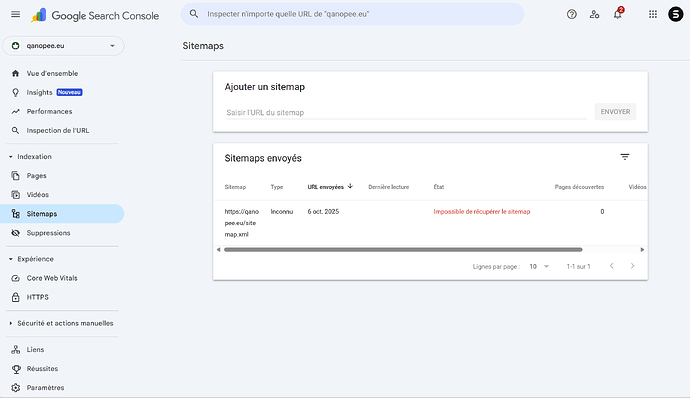

For several weeks now, I have been experiencing indexing issues with Google Search Console.

It all started a year ago when I published my website qanopee.eu with a redirect to www (visitors see the site as www.qanopee.eu). The URLs in the sitemap did not include the www subdomain, but that didn’t matter to me because the www.qanopee.eu version appeared in Google’s index, which was enough for me. I didn’t try to change the sitemap or manually submit all the URLs to Google.

Recently, I wanted to improve my website’s presence in the index, and the simplest fix for the indexing problems seemed to be removing the redirect, which I did. The URLs in the sitemap now match the addresses that should appear in the index and no longer redirect. However, when I submit the sitemap URL in Google Search Console, it reports “Unable to get the sitemap” (Bing Webmaster Tools does not show this error).

Does anyone know what might be causing this?

Thanks in advance!

Adrien

Hi @Adrien_Uriel

Here’s a quick self-check list that usually fixes the “Unable to get sitemap” issue:

Open your sitemap URL in a browser — it should load XML directly (no redirect or HTML page).

Check that it returns 200 OK and Content-Type: application/xml.

All URLs inside should match the same domain you verified in Search Console (www or not).

If you use Cloudflare or similar, make sure Googlebot isn’t blocked from that file.
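
A minimal sketch of the status and Content-Type checks, assuming Python 3 and only the standard library. The helper names check_sitemap_headers and fetch_headers and the "sitemap-check" User-Agent are hypothetical. The checking rule takes plain values so it can be tested without network access, and the fetch sends no cache validators, so a healthy sitemap should come back as 200. Accepting text/xml alongside application/xml is an assumption of this sketch.

```python
from __future__ import annotations

import urllib.request

# Media types accepted as XML in this sketch (assumption: text/xml is fine too).
XML_TYPES = {"application/xml", "text/xml"}


def check_sitemap_headers(status: int, content_type: str | None) -> list[str]:
    """Return the problems found in a sitemap response; an empty list means OK."""
    problems = []
    if status != 200:
        problems.append(f"expected status 200, got {status}")
    # Ignore parameters such as "; charset=UTF-8" when comparing media types.
    media_type = (content_type or "").split(";")[0].strip().lower()
    if media_type not in XML_TYPES:
        problems.append(f"unexpected Content-Type: {content_type!r}")
    return problems


def fetch_headers(url: str) -> tuple[int, str | None, str]:
    """Plain GET with no cache validators (no If-None-Match / If-Modified-Since).

    Returns (status, Content-Type, final URL). urlopen follows redirects, so a
    final URL different from `url` means a redirect happened; non-2xx statuses
    raise urllib.error.HTTPError instead of returning.
    """
    req = urllib.request.Request(url, headers={"User-Agent": "sitemap-check"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status, resp.headers.get("Content-Type"), resp.geturl()
```

For example, status, ctype, final_url = fetch_headers("https://qanopee.eu/sitemap.xml"), then check_sitemap_headers(status, ctype). This only shows what this script receives; Googlebot's own fetch may still differ.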

If all that looks good and it still fails, share the sitemap URL — I can check the headers and tell you exactly what’s wrong.

Hi @Kalisperakichris,

Thank you for your answer.

So here are the elements you asked me to check:

- The sitemap loads as an XML file, not an HTML file.

- Since this morning the page returns HTTP 304 (yesterday it was 200 OK).

I don’t see Content-Type in the HTTP request headers, but in the inspector I do see Accept with the value text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7.

- All the URLs match the domain verified in GSC (qanopee.eu, without subdomains).

- I don’t use Cloudflare or a similar system that could block Googlebot.
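
The domain check can be automated with a short script. This is a minimal sketch, assuming the sitemap follows the sitemaps.org protocol and has already been downloaded as a string; mismatched_locs is a hypothetical helper. It lists every <loc> whose host differs from the expected one, which is how a www / non-www mix-up would show up.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def mismatched_locs(sitemap_xml: str, expected_host: str) -> list[str]:
    """Return every <loc> URL whose host differs from expected_host."""
    root = ET.fromstring(sitemap_xml)
    bad = []
    # ".//sm:loc" matches <loc> in both <urlset> files and <sitemapindex> files.
    for loc in root.findall(".//sm:loc", NS):
        url = (loc.text or "").strip()
        # .hostname is lowercased and drops any port, so a "www." prefix stays visible.
        if urlsplit(url).hostname != expected_host.lower():
            bad.append(url)
    return bad
```

For example, mismatched_locs(xml_text, "qanopee.eu") should return an empty list when every URL uses the bare domain.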

Perhaps the problem stems from the HTTP 304? If so, do you know how to resolve it?

Here is the sitemap link: https://qanopee.eu/sitemap.xml

Otherwise, do you have any idea what might be causing the problem?

Thank you very much for your help!

Adrien

Hi Adrien

Thanks for checking all that — super helpful details.

The HTTP 304 (“Not Modified”) is likely what’s confusing Search Console. Googlebot expects a direct 200 OK with the XML body when fetching a sitemap; a cached 304 response can make it fail to parse.

Here’s what to do:

Try to serve the sitemap with a fresh 200 OK response — disable or clear any server/page-cache rule that returns 304 for sitemap.xml.

If your CMS or host uses conditional caching (ETag / Last-Modified headers), you can temporarily disable them just for that file or force the server to always send the XML content.

Then re-check the sitemap URL in Search Console (URL Inspection → “Test live URL”).
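
For context, here is a simplified sketch of how a server with ETag / Last-Modified caching typically decides between 304 and 200, following the precedence rules for HTTP conditional requests in RFC 9110: a 304 is only possible when the request itself carries a validator such as If-None-Match or If-Modified-Since. The function name and its inputs are hypothetical; real servers also handle details this skips (case-insensitive header names, commas inside ETags, methods other than GET/HEAD).

```python
from __future__ import annotations

from datetime import datetime
from email.utils import parsedate_to_datetime


def _strip_weak(tag: str) -> str:
    # Weak comparison: W/"abc" and "abc" count as the same tag.
    tag = tag.strip()
    return tag[2:] if tag.startswith("W/") else tag


def conditional_status(request_headers: dict[str, str],
                       etag: str,
                       last_modified: datetime) -> int:
    """Status for a GET of an unchanged file; last_modified must be tz-aware (UTC).

    Header names are matched exactly here, unlike a real server.
    """
    inm = request_headers.get("If-None-Match")
    if inm is not None:
        # If-None-Match takes precedence; If-Modified-Since is then ignored.
        if inm.strip() == "*":
            return 304
        tags = {_strip_weak(t) for t in inm.split(",")}
        return 304 if _strip_weak(etag) in tags else 200
    ims = request_headers.get("If-Modified-Since")
    if ims is not None:
        try:
            since = parsedate_to_datetime(ims)
        except (TypeError, ValueError):
            return 200  # an unparseable date is ignored
        if last_modified <= since:
            return 304
    # No validators at all (e.g. a first-time fetch): send the full body.
    return 200
```

The last line is the relevant case for a client that has no cached copy: without a validator in the request, the full XML with 200 is what gets sent.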

If that fixes it, you can re-enable normal caching later — the key is that Google should always see the full XML (not a 304) when it checks your sitemap.

Everything else you listed looks perfect — same domain, valid XML, no blocking system. This 304 behaviour is almost certainly the root cause.

Hi @Kalisperakichris,

So I learned that http 304 is used to not reload the content which is already in cache on my computer.

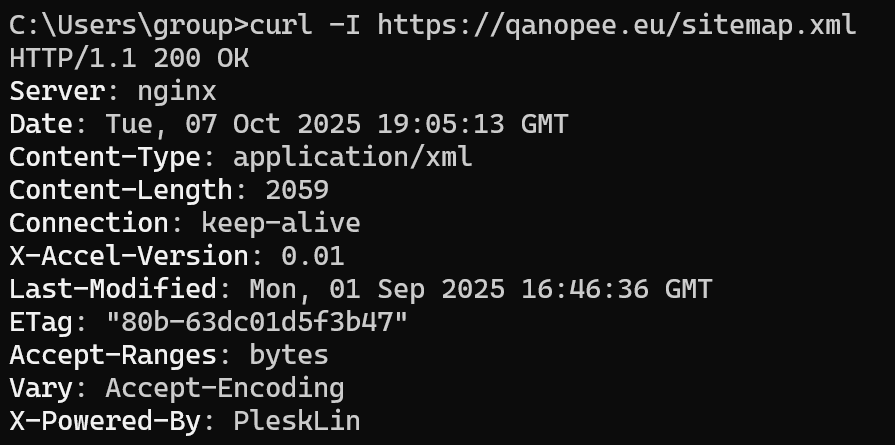

I tried with my command prompt and I got that:

So there is no issue with the http answer, which is already 200 OK.
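
The 200 versus 304 difference comes from cache validators: browsers typically add If-None-Match when they already hold a copy with an ETag, while command-line tools such as curl send no validators unless asked. A minimal, self-contained sketch of that difference, assuming Python 3 and a throwaway local server on 127.0.0.1; the sitemap body, the ETag value "v1" and the names SitemapHandler, probe and demo are hypothetical and unrelated to the real qanopee.eu server.

```python
from __future__ import annotations

import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical sitemap body and ETag, for illustration only.
SITEMAP = (b'<?xml version="1.0" encoding="UTF-8"?>\n'
           b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"/>\n')
ETAG = '"v1"'


class SitemapHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Simplified: only an exact If-None-Match match yields 304.
        if self.headers.get("If-None-Match") == ETAG:
            self.send_response(304)  # cached copy is still valid: no body sent
            self.send_header("ETag", ETAG)
            self.end_headers()
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/xml")
        self.send_header("Content-Length", str(len(SITEMAP)))
        self.send_header("ETag", ETAG)
        self.end_headers()
        self.wfile.write(SITEMAP)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def probe(port: int, headers: dict[str, str] | None = None) -> tuple[int, str | None]:
    """GET /sitemap.xml on the local server and return (status, Content-Type)."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", "/sitemap.xml", headers=headers or {})
        resp = conn.getresponse()
        resp.read()  # a 304 has no body, so this returns b""
        return resp.status, resp.getheader("Content-Type")
    finally:
        conn.close()


def demo():
    server = ThreadingHTTPServer(("127.0.0.1", 0), SitemapHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        first = probe(port)                             # like curl: no validators
        revisit = probe(port, {"If-None-Match": ETAG})  # like a browser revisit
        return first, revisit
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo())
```

Running it prints ((200, 'application/xml'), (304, None)): the same file gets two different statuses depending only on what the client sends.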

The problem must be somewhere else… Any idea?

Thank you!

Adrien